Study of Spontaneous and Acted Learn-Related Emotions Through Facial Expressions and Galvanic Skin Response

Keywords: Facial Expression Recognition, GSR, MobileNet, learn-related emotions, CNN.

In learning environments, emotions can activate or deactivate the learning process. Boredom, stress and happiness (learn-related emotions) are included in physiological signal datasets, but not in Facial Expression Recognition (FER) datasets. In addition, the Galvanic Skin Response (GSR) signal is the most representative data for emotion classification. This paper presents a technique to generate a dataset of facial expressions and physiological signals of spontaneous and acted learn-related emotions (boredom, stress, happiness and a neutral state) elicited by video stimuli and face acting. We conducted an experiment with 22 Mexican participants; a dataset of 1,840 facial expression images and 1,584 GSR records was generated. A Convolutional Neural Network (CNN) model was trained on the facial expression dataset, and a statistical analysis was then performed on the GSR dataset. The MobileNet CNN reached an overall accuracy of 94.36% in a confusion matrix, but the accuracy decreased to 28% for external images not used in training. The statistical results of GSR, with significant differences between confused emotions, are discussed.

Experiment

The objectives of the experiment are:

- 1. The creation of the FER and GSR datasets of acted and spontaneous emotions related to the learning process.

- 2. The emotion classification with a CNN through FER.

- 3. The identification of significant differences in the GSR Percentage of Change (P.C.) between spontaneous/acted emotions and neutrality.
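The third objective relies on the GSR Percentage of Change with respect to the neutral state. The formula is not spelled out in this section, so the following is only a minimal sketch that assumes the conventional definition, (emotion mean − neutral mean) / neutral mean × 100. The function name and the sample readings are hypothetical.

```python
from statistics import mean


def percentage_of_change(emotion_samples, neutral_samples):
    """Percent change of the mean GSR reading against the neutral baseline.

    Assumed definition (not given in the text):
        P.C. = (mean(emotion) - mean(neutral)) / mean(neutral) * 100
    """
    baseline = mean(neutral_samples)
    if baseline == 0:
        raise ValueError("neutral baseline must be non-zero")
    return (mean(emotion_samples) - baseline) / baseline * 100.0


# Hypothetical raw sensor readings, for illustration only.
neutral = [400, 410, 390, 400]
stress = [440, 450, 430, 440]
print(percentage_of_change(stress, neutral))
```

A positive value indicates that the mean reading during the emotion is above the neutral baseline, and a negative value that it is below.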

Materials and Method

To create the FER and GSR datasets, we used the following materials: a Haar feature-based cascade classifier (a machine-learning approach in which a cascade function is trained from many positive and negative images), as implemented in OpenCV, for face detection; a Canon Rebel T3 DSLR camera to capture the facial images; a Grove GSR sensor, which measures the resistance of the human skin through two 1/4″ (6 mm) nickel electrodes attached to an electronic circuit, for the physiological data; and a 22-inch LCD monitor.

An audiovisual stimulus was shown to the participant to elicit the spontaneous emotions; it consisted of video clips from films validated with participants in previous emotion-related studies. Table 1 lists the film used for each stimulus with a brief description of the scene. The neutral and happy stimuli were validated with 100 participants, the boredom stimulus was validated in two studies (study 1: 241 participants; study 2: 416 participants), and the stress stimulus was validated with 41 participants. For the acted emotions, participants imitated each facial expression for an interval of 10 seconds, following FACS and other proposals.

| Emotion | Film title | Scene description |
|---|---|---|
| Neutral | The Lover | Marguerite gets in a car, gets off and walks to a house |
| Happy | When Harry Met Sally | Sally fakes an orgasm in a restaurant |
| Stress | Irreversible | Woman raped and brutally beaten |
| Boredom | Merrifield and Danckert | Two men ironing clothes |

Participants

A sample of 22 subjects of Hispanic ethnicity participated in the study: 9 females and 13 males, aged 18 to 62 years.

Procedure

To create the dataset, we proposed a technique consisting of two sessions: spontaneous and acted. First, participants were given instructions about the session and about how to place their index and middle phalanges on the GSR electrodes. During the session, participants watched a series of film clips (stimuli) while raw data were recorded: photos taken at 10 FPS and GSR readings at 10 Hz.

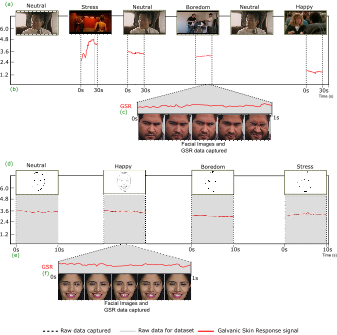

The figure below illustrates the sequence of the spontaneous and acted sessions. Fig. (a) shows the spontaneous session, in which the stimuli were presented in the following sequence: Neutral → Stress → Neutral → Boredom → Neutral → Happy. At the end of each stimulus, neutrality was induced in the participant so that the next desired emotion could be generated correctly. Fig. (b) indicates the raw data taken in an interval of 30 seconds from the scene where the strongest emotion appeared. Fig. (c) shows the facial expressions and GSR readings captured at 10 FPS and 10 Hz, respectively, for the spontaneous emotions. For the acted emotions (bottom timeline of the figure), participants were instructed to carefully read the instructions for each emotion and then perform the imitation for 10 seconds (Fig. (d)). Fig. (e) indicates the raw data taken in an interval of 10 seconds. Fig. (f) shows the facial expressions and GSR readings captured at 10 FPS and 10 Hz, respectively, for the acted emotions.

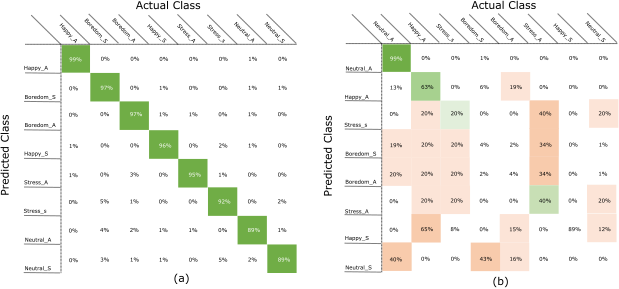
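The windowing described for the two sessions (30 seconds around the strongest emotion for spontaneous data, 10 seconds for acted data, both sampled at 10 FPS / 10 Hz) can be sketched as a simple slice over a recording. This is an illustrative sketch only: the function name, the start times and the synthetic recording are assumptions, not part of the original procedure.

```python
SAMPLE_RATE_HZ = 10  # camera frame rate (FPS) and GSR sampling rate (Hz), per the text


def extract_window(samples, start_s, duration_s, rate_hz=SAMPLE_RATE_HZ):
    """Return the slice of `samples` covering [start_s, start_s + duration_s)."""
    start = int(round(start_s * rate_hz))
    stop = start + int(round(duration_s * rate_hz))
    if start < 0 or stop > len(samples):
        raise ValueError("window falls outside the recording")
    return samples[start:stop]


# Hypothetical 2-minute recording with one value per sample.
recording = list(range(120 * SAMPLE_RATE_HZ))

# 30 s window for a spontaneous emotion (start time chosen arbitrarily here).
spontaneous = extract_window(recording, start_s=45, duration_s=30)
# 10 s window for an acted emotion.
acted = extract_window(recording, start_s=0, duration_s=10)

print(len(spontaneous), len(acted))
```

Because images and GSR readings share the same rate, the same index range can be applied to both streams to keep each frame paired with a GSR reading.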

The emotion classification was implemented with the MobileNet architecture in TensorFlow. The 1,840 images of the FER dataset were resized to 224 × 224 for the input layer, and the CNN then classified them into 8 classes: “Happy A”, “Happy S”, “Stress A”, “Stress S”, “Boredom A”, “Boredom S”, “Neutral A” and “Neutral S” (“A” stands for acted emotion and “S” for spontaneous emotion). To test the CNN on external images, a total of 80 images (10 per class) were used; these images were taken from social network sites where users declared their emotional state.
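The eight class names are the product of four emotions and two conditions. A small sketch of how such a label set could be built and parsed is given below; the constant and function names are hypothetical and are not taken from the original implementation.

```python
from itertools import product

EMOTIONS = ("Happy", "Stress", "Boredom", "Neutral")
CONDITIONS = {"A": "acted", "S": "spontaneous"}

# Class names in the order listed in the text: "Happy A", "Happy S", "Stress A", ...
CLASS_NAMES = [f"{emotion} {code}" for emotion, code in product(EMOTIONS, CONDITIONS)]


def parse_label(label):
    """Split a class name such as "Stress S" into ("Stress", "spontaneous")."""
    emotion, code = label.rsplit(" ", 1)
    return emotion, CONDITIONS[code]


IMAGES_PER_CLASS_EXTERNAL = 10  # external test images per class, per the text

print(CLASS_NAMES)
print(len(CLASS_NAMES) * IMAGES_PER_CLASS_EXTERNAL)  # size of the external test set
```

Keeping the emotion and the condition separable makes it possible to score a prediction on the emotion alone, ignoring whether it was acted or spontaneous.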

Results and Discussion

Classification on the training dataset gave excellent results: a training precision of 100% and a validation accuracy of 96.5%. In contrast, classification of the external images with the previously trained model performed poorly, with an average of 28.75%. To evaluate the performance of MobileNet, we analyzed the classifier with a confusion matrix (figure below), which showed an overall accuracy of 94.6%.
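For reference, overall accuracy from a confusion matrix is the sum of the diagonal divided by the total number of samples. The sketch below uses a hypothetical 3-class matrix, not the matrix from this study. It also works out what 28.75% means on the 80 external images (23 correct) and the 12.5% accuracy expected from random guessing over 8 balanced classes.

```python
def overall_accuracy(confusion):
    """Overall accuracy = correctly classified samples (diagonal) / all samples."""
    total = sum(sum(row) for row in confusion)
    if total == 0:
        raise ValueError("confusion matrix is empty")
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    return correct / total


# Hypothetical 3-class matrix (rows: true class, columns: predicted class).
toy = [
    [8, 1, 1],
    [0, 9, 1],
    [2, 0, 8],
]
print(overall_accuracy(toy))

# External test set from the text: 80 images, 8 balanced classes.
n_external, n_classes = 80, 8
correct_external = round(0.2875 * n_external)  # images classified correctly
chance_level = 1 / n_classes                   # accuracy of random guessing
print(correct_external, chance_level)
```

The external result is therefore above chance level but far below the accuracy obtained on the study's own images.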

Conclusions

A classifier of learn-related emotions is a powerful tool for intelligent learning environments: the recognition of emotional states could improve the reinforcement learning of a focus group in education (e.g., educational tasks and tools) and gaming (e.g., reactions to different levels of immersion). The contribution of this work lies in:

- 1. A new technique to generate a dataset that employs effective stimuli for FER and GSR.

- 2. Two datasets: FER, with 1,840 facial expression images, and GSR, with 1,548 records (baseline difference between emotions and neutrality).

- 3. Trained model of spontaneous and acted emotions.

- 4. The possibility that GSR may enhance the classification of the CNN.

Future work will consist of including other emotions related to learning (e.g., confusion) and of incorporating the GSR data into the RGB channel values of the training images, then re-training the CNN and contrasting the results.
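How the GSR data would be written into the RGB channels is not specified. One possible reading, given purely as an assumption, is to rescale the GSR reading paired with a frame to the 0 to 255 range and store it in one channel of that frame. The sketch below uses nested lists as a stand-in for an image; the function names, the choice of channel and the value range are all hypothetical.

```python
def gsr_to_byte(value, lo, hi):
    """Linearly rescale a GSR reading from [lo, hi] to an integer in 0..255."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    clipped = min(max(value, lo), hi)
    return round((clipped - lo) / (hi - lo) * 255)


def embed_gsr(image, gsr_value, lo, hi, channel=2):
    """Return a copy of `image` with one channel overwritten by the scaled GSR value.

    `image` is a list of rows; each row is a list of [R, G, B] pixels.
    Overwriting a channel is an assumption made for this sketch only.
    """
    level = gsr_to_byte(gsr_value, lo, hi)
    out = []
    for row in image:
        new_row = []
        for pixel in row:
            new_pixel = list(pixel)
            new_pixel[channel] = level
            new_row.append(new_pixel)
        out.append(new_row)
    return out


# Hypothetical 1x2 image and a GSR reading at the top of the assumed range.
tiny = [[[10, 20, 30], [40, 50, 60]]]
print(embed_gsr(tiny, gsr_value=500, lo=400, hi=500))
```

Overwriting a whole channel discards its colour information, so blending the value or adding it as a fourth input channel are alternatives that would need to be compared experimentally.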